The inside story of the JUP airdrop at Phantom

This blog post was originally written by The Phantom Team. You can find the original post on their blog at the following link: https://phantom.app/learn/developers/jup-airdrop-at-phantom

As the largest DEX aggregator on Solana, Jupiter shook up the crypto world when it announced its JUP token at Solana Breakpoint 2023. It was clear that this wouldn’t just be one of the biggest airdrops on Solana, but on any blockchain ever.

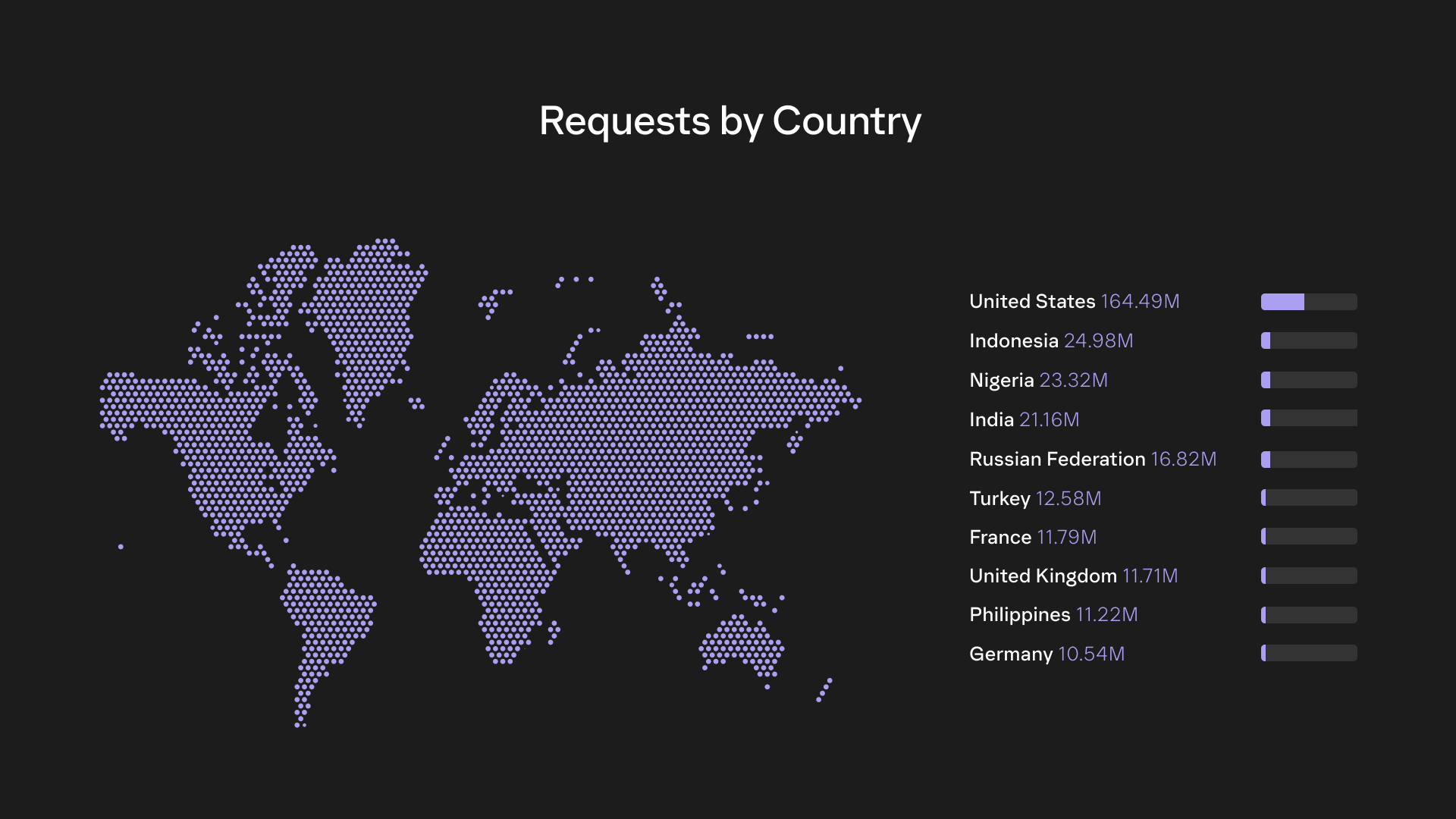

The JUP airdrop would be a significant liquidity event for Solana, as Jupiter was slated to distribute nearly $700 million worth of JUP tokens to its users. With JUP tokens being distributed to nearly one million wallets, it would also be a massive test for Phantom, our infrastructure, and the Solana network.

While our team had experience helping ensure smooth airdrops for PYTH and JTO, JUP would be an order of magnitude bigger. We knew it would be a monumental task, but we were determined to make it work. And we did.

Although it required a herculean effort from our giga-brain Engineering team and our partners, we successfully pulled off this massive airdrop. And now, with it behind us, we thought it would be worth sharing a behind-the-scenes look into what happened, what we learned, and what lies ahead.

Let’s dive in.

Learning from WEN

January 25, 2024

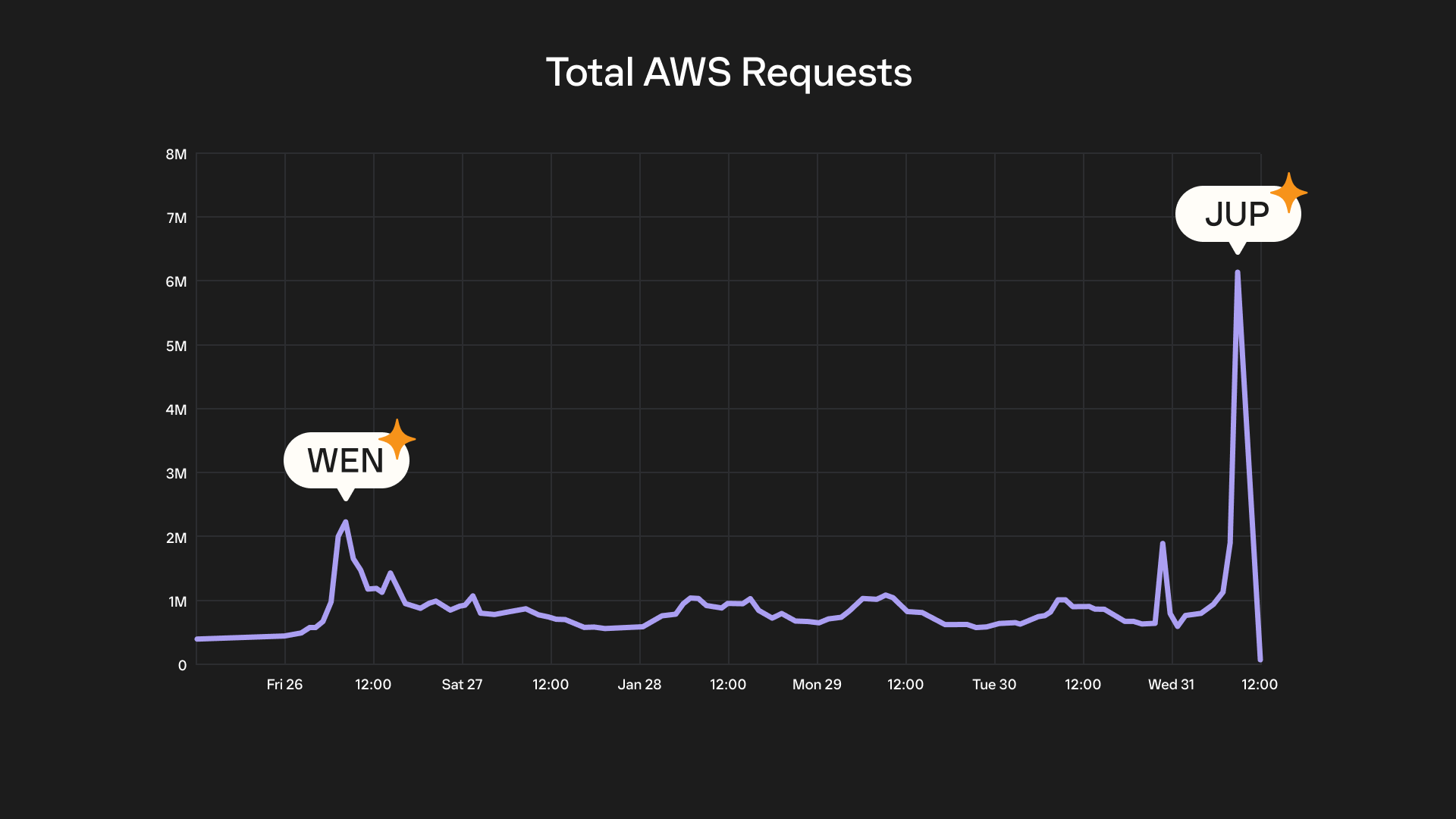

As we were preparing for the JUP airdrop, Jupiter’s founder announced the WEN token, a community coin based on a fractionalized NFT and the first token launch on Jupiter’s LFG launchpad. For this impromptu airdrop, more than one million wallets were eligible.

This would be our first stress test. And it was set to launch in 24 hours. So, we began collaborating with our exceptional partners who help power Phantom:

- Allium: Enterprise on-chain data analytics

- QuickNode and Triton: RPC infrastructure

- Blowfish: Wallet security

January 26, 2024

The WEN airdrop went live at 15:00 UTC. By 15:04 UTC we started to see a surge in activity. While we were able to help hundreds of thousands of users claim their airdrop, the massive usage overloaded our data infrastructure with too many concurrent connections.

- At peak, we experienced 8K requests per second (RPS).

- This was roughly 8x the PYTH airdrop and 20x the number of users we saw during the JTO airdrop.

- This took down some of our endpoints (all of them served through API Gateway and Lambda).

So, what happened? Several factors contributed to this issue.

The huge spike in traffic exhausted our available connections to our database. This resulted in timeouts when attempting to connect to Allium, leading to over 20,000 concurrent Lambda executions. We then raised our concurrent execution limit to 30,000. Eventually, Allium increased its limits, and we re-deployed our Lambdas to clear idle connections, but problems persisted.
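The jump to tens of thousands of concurrent Lambda executions follows from Little's law: in steady state, concurrency equals the request rate times how long each request stays in flight. That means slow or hanging database connections inflate concurrency even when traffic stays flat. Here is a back-of-envelope sketch. It assumes all 8K RPS reached Lambda, which is our simplification since that figure covered all endpoints, and it uses a hypothetical 100 ms healthy latency:

```python
def expected_concurrency(requests_per_second: float, seconds_in_flight: float) -> float:
    """Little's law, L = lambda * W: average concurrent executions equal the
    arrival rate times how long each execution stays in flight."""
    return requests_per_second * seconds_in_flight


def implied_seconds_in_flight(concurrency: float, requests_per_second: float) -> float:
    """Rearranged, W = L / lambda: how long executions must be lasting to
    sustain an observed concurrency at a given request rate."""
    return concurrency / requests_per_second


# Hypothetical healthy case: ~100 ms per invocation keeps concurrency modest.
print(expected_concurrency(8_000, 0.1))          # ~800
# Worst case: every invocation hangs until a 30-second timeout.
print(expected_concurrency(8_000, 30.0))         # 240000.0
# 20,000 concurrent executions at 8K RPS means each was lasting ~2.5 s.
print(implied_seconds_in_flight(20_000, 8_000))  # 2.5
```

Once concurrency hits the configured limit, AWS throttles further invocations. So raising the limit buys time, but shortening how long each invocation can hang is what actually shrinks L.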

After further investigation, we discovered that we had reached the limit of simultaneous connections through our NAT Gateway. In essence, our services hit the default AWS limit for simultaneous network connections. These connections, or at least their ports, seemed to remain in use even after the Lambdas were cleaned up, which extended the outage. The issue eventually resolved itself.
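AWS documents the NAT gateway limit as 55,000 simultaneous connections per assigned IP address to each unique destination (destination IP, destination port, and protocol). If most Lambda traffic goes to a single provider endpoint, all of it counts against that one destination's budget. Below is a small sketch of the arithmetic. `ips_needed` is a hypothetical helper, and the 120K peak is an illustrative number, not a measurement:

```python
CONNECTIONS_PER_IP = 55_000  # AWS NAT gateway limit per IP address, per unique destination


def nat_connection_limit(ip_addresses: int) -> int:
    """Simultaneous connections a NAT gateway can hold open to ONE unique
    destination (destination IP, destination port, protocol)."""
    if ip_addresses < 1:
        raise ValueError("a NAT gateway has at least one (primary) IP address")
    return CONNECTIONS_PER_IP * ip_addresses


def ips_needed(peak_connections: int) -> int:
    """Smallest number of NAT gateway IPs whose combined limit covers the peak."""
    return -(-peak_connections // CONNECTIONS_PER_IP)  # ceiling division


print(nat_connection_limit(1))  # 55000: a single primary IP
print(nat_connection_limit(8))  # 440000: 1 primary + 7 secondary IPs
print(ips_needed(120_000))      # 3 IPs for a hypothetical 120K-connection peak
```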

While we did our best to prepare, this was a valuable lesson ahead of JUP.

Preparing for JUP

January 27, 2024

With only four days until the airdrop, and plenty of work to do, we swung into action. First, we assembled a dedicated JUP airdrop squad. Members included Ahmad, Carlo Licciardi, Congkai Sun, Dries Croons, Francesco Agosti, Kenji Chao, Mitchell Catoen, Raj, and Ricardo Pinho from Phantom, with additional support from Chenghan, Claudia, and Herbert from Allium.

With this core team assembled, we then coordinated load tests with other key partners.

- QuickNode and Triton needed to handle the highest loads, as they power Phantom's sends, in-wallet swaps, and numerous other functions, such as retrieving the status of the JUP claim transaction after submission.

- Both providers conducted independent external load tests and confirmed the capacity to handle 25K RPS per node across three machines in three different clusters. This gave us a total capacity of 75K RPS per service.

- Blowfish needed to be able to simulate every dapp interaction and claim transaction.

- To manage this, Blowfish allocated additional machines and resources. They also conducted a load test to handle a maximum of 25K RPS for transaction simulations that are triggered when the dapp connection modal appears in the wallet.
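The RPC capacity figures reduce to simple multiplication. Spelled out as a quick sketch, using the WEN peak as a yardstick (treating all 8K RPS as RPC traffic is our simplifying assumption, since that figure covered all of Phantom's endpoints):

```python
PER_NODE_RPS = 25_000     # each RPC provider's load-tested capacity per node
NODES_PER_PROVIDER = 3    # three machines in three different clusters
WEN_PEAK_RPS = 8_000      # Phantom's peak during the WEN airdrop (all endpoints)

per_provider = PER_NODE_RPS * NODES_PER_PROVIDER  # 75,000 RPS per service
headroom = per_provider / WEN_PEAK_RPS            # capacity as a multiple of the WEN peak

print(f"{per_provider:,} RPS per provider, {headroom:.1f}x the WEN peak")
```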

Altogether, we dedicated our entire weekend (with little sleep) to organizing, preparing, and testing our infrastructure:

- Allium collaborated with us to create a new database for EVM queries, which powers our Activity tab.

- We performed 16 load tests on our staging environment and two on our production environment. These tests spanned six endpoints and utilized two Allium database backups—one for BTC and one for EVM history. This was to ensure our providers for other chains wouldn’t impact Solana performance.

- We began with a low load of 1K RPS and gradually increased it to 30K RPS across 10 machines, nearly four times the 8K RPS peak we saw during the WEN airdrop.

- We split the JUP airdrop traffic evenly (50/50) between our two RPC providers. We also used QuickNode for EVM.

- We expanded our NAT gateway to 8 IP addresses (1 primary and 7 secondary). Since each IP address supports up to 55K simultaneous connections to a given destination, this raised the port limit for external connections from our AWS infrastructure from 55K to 440K.

- We lowered all Lambda timeouts to 10 seconds, down from the previous default of 30 seconds. This way, if a database connection hung or our RPC provider took too long to respond, the request would fail quickly and the client would retry.

- Finally, we implemented Datadog alerts for Port Allocation Errors and Idle Timeouts to ensure we receive notifications for any hanging connections to external service providers.
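The 50/50 RPC split can be expressed as weighted routing. Below is a minimal sketch using smooth weighted round-robin, the algorithm nginx uses for weighted upstreams. The post doesn't describe Phantom's actual routing mechanism, so the class and method names are hypothetical and the provider names are just labels. Changing a weight rebalances traffic at runtime without a redeploy:

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Upstream:
    name: str
    weight: int
    current: int = 0  # running score used by smooth weighted round-robin


class WeightedRouter:
    """Smooth weighted round-robin: over every cycle of sum(weights) picks,
    each upstream is chosen exactly `weight` times, evenly interleaved."""

    def __init__(self, weights: dict[str, int]):
        self.upstreams = [Upstream(name, w) for name, w in weights.items()]

    def set_weights(self, weights: dict[str, int]) -> None:
        # Rebalance at runtime, e.g. to shift load away from a struggling provider.
        for u in self.upstreams:
            u.weight = weights.get(u.name, u.weight)
            u.current = 0  # restart the cycle so the new split applies immediately

    def pick(self) -> str:
        total = sum(u.weight for u in self.upstreams)
        if total <= 0:
            raise ValueError("at least one upstream needs a positive weight")
        best = None
        for u in self.upstreams:
            u.current += u.weight
            if best is None or u.current > best.current:
                best = u
        best.current -= total
        return best.name


router = WeightedRouter({"triton": 1, "quicknode": 1})  # 50/50 split
print(Counter(router.pick() for _ in range(1_000)))     # 500 each
router.set_weights({"triton": 1, "quicknode": 3})       # shift to 25/75
print(Counter(router.pick() for _ in range(1_000)))     # triton 250, quicknode 750
```

Unlike random weighted choice, this interleaves picks evenly, so neither provider sees bursts of back-to-back requests.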

This work continued until the JUP airdrop went live on January 31 at 10AM EST.

JUP Launch day

January 31, 2024

At 9AM EST, one hour before the airdrop, we established a war room. Adopting a military structure, we assigned a commander and points of contact for each service where we anticipated high traffic: Tokens, History, Banners, AWS DevOps, Cloudflare Security, Triton, QuickNode, and Blowfish.

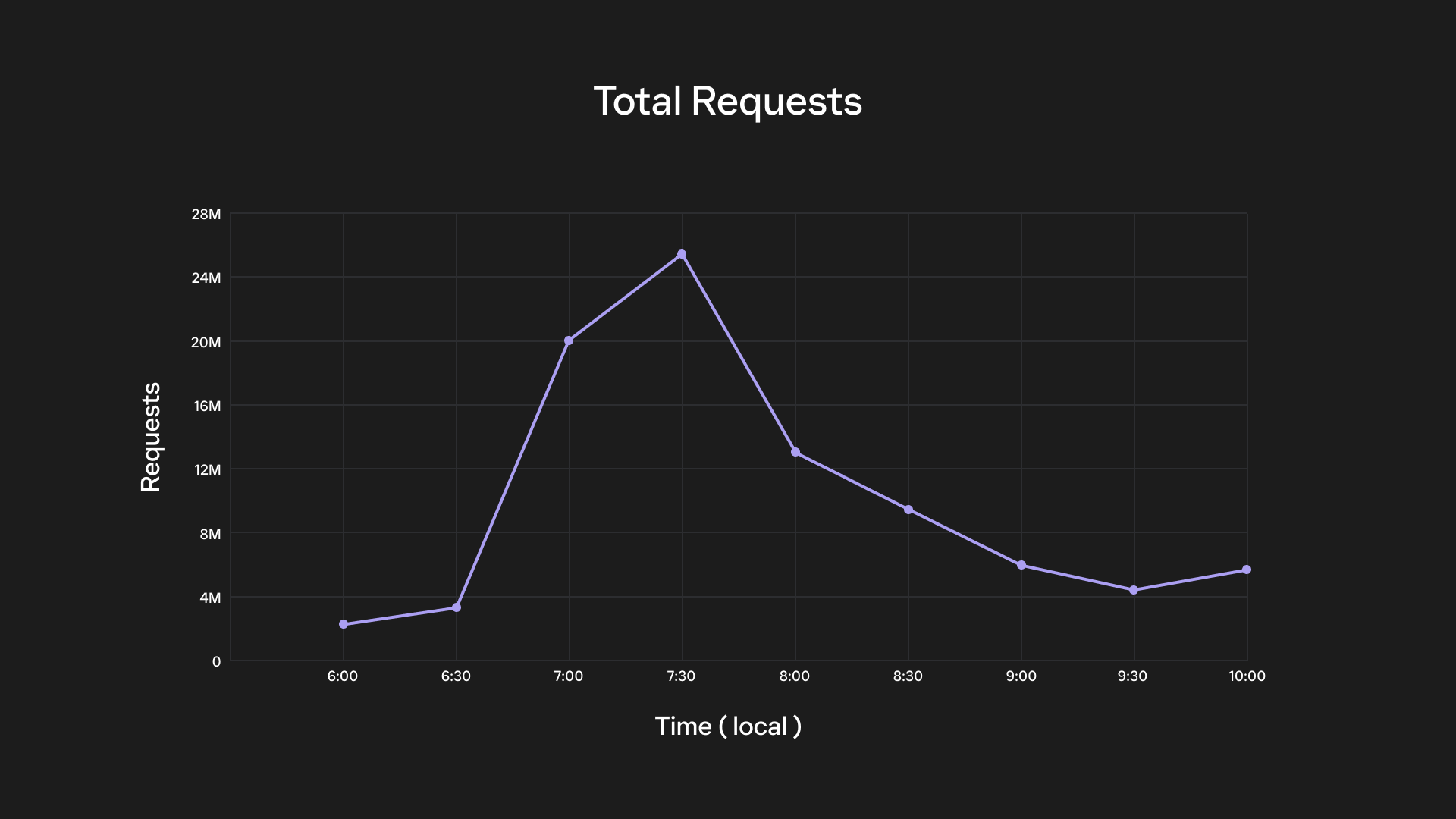

At 10AM EST, the airdrop went live, and we immediately experienced the highest network traffic we’ve ever seen.

- 90,000 requests per second (RPS) at peak

- 477,000,000 total requests over ~4 hours

- 3x more traffic than the $WEN airdrop

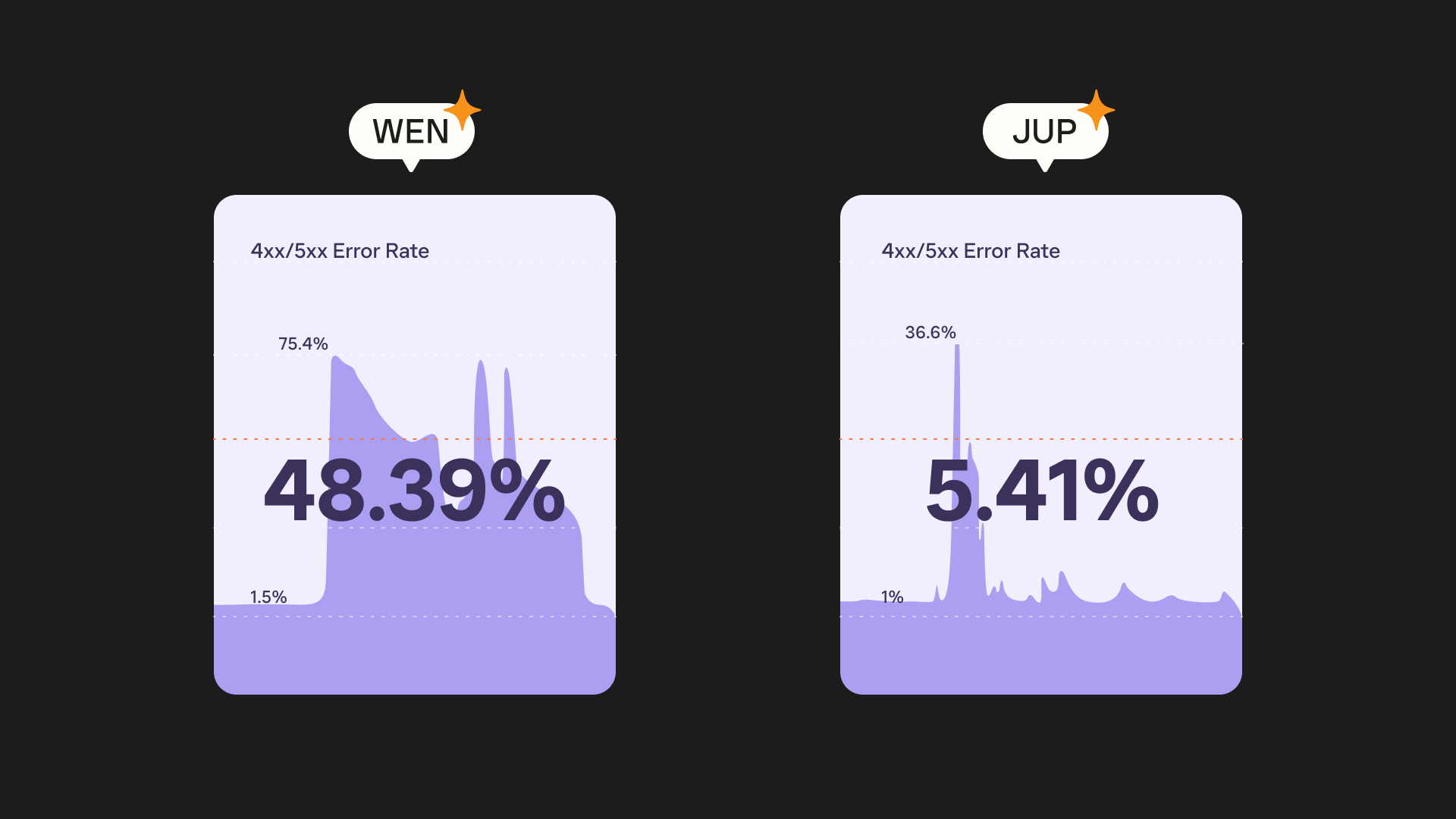

Despite this overwhelming surge, we delivered much better performance this time around.

Compared to the WEN airdrop, we:

- Served traffic 6x quicker

- Experienced 10x fewer errors

- Delivered 6x quicker p95 response time despite seeing 10x the traffic

In addition to improved infrastructure, our team's real-time problem solving also helped ensure smooth operations:

- When we observed some minor database locks on Allium, we were able to address them in real time, thanks to the quick thinking of Allium’s experienced engineers. Their clever solution of changing the root password on the databases released the held-up connections.

- While we didn’t add Priority Fees to the claim transaction (Jupiter applied them by default, which we respected, and users could customize them in the claim app), we could control Priority Fees for other internal wallet transactions from our backend, without changing client code or shipping a new release. Huge shout out to Anvit on the Phantom team for making this happen!

- We split RPC traffic between two RPC providers to balance the load, and we were ready to rebalance it at any time if either provider showed issues.

- With shorter timeouts on the backend when making calls to external providers, clients could fail early and rely on the retry logic rather than causing bottlenecks.
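Failing fast only helps if the caller retries. Here is a minimal sketch of that client-side pattern. It assumes timeouts surface as `TimeoutError` and uses hypothetical backoff values; `call_with_retries` is illustrative, not Phantom's client code. The 10-second default mirrors the backend timeout described in the preparation section:

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retries(call: Callable[[float], T], *, timeout_s: float = 10.0,
                      attempts: int = 3, base_delay_s: float = 0.5) -> T:
    """Call `call(timeout_s)`; if it raises TimeoutError, back off and retry.

    `call` stands in for a backend request that gives up after `timeout_s`
    seconds instead of hanging on a stuck database or RPC connection.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return call(timeout_s)
        except TimeoutError:
            if attempt == attempts - 1:
                raise  # out of retries: surface the failure
            time.sleep(base_delay_s * 2 ** attempt)  # exponential backoff: 0.5 s, 1 s, ...
    raise AssertionError("unreachable")


# Usage: a hypothetical backend that times out twice, then answers.
attempts_seen: list[float] = []


def flaky_backend(timeout_s: float) -> str:
    attempts_seen.append(timeout_s)
    if len(attempts_seen) < 3:
        raise TimeoutError(f"no response within {timeout_s} s")
    return "confirmed"


print(call_with_retries(flaky_backend, base_delay_s=0.01), "after", len(attempts_seen), "attempts")
```

Backoff spaces out retries so a struggling backend isn't hit by a synchronized wave. Production clients usually add random jitter on top.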

Around two hours after JUP's launch, traffic began to normalize, and we breathed a collective sigh of relief. Despite four consecutive days of long hours and little sleep, we made it through relatively unscathed and successfully helped hundreds of thousands of users claim their well-deserved JUP tokens.

Learnings and takeaways

Despite minor setbacks, we’re proud to have helped hundreds of thousands of users during such a monumental moment. But this wouldn't have been possible without the combined efforts of Allium, Triton, QuickNode, Blowfish, and our very own Phantom developers. Together, we demonstrated that Solana is the most scalable and battle-tested ecosystem in crypto. And it’s all thanks to the collaborative and cooperative spirit of our wonderful developer community.

With the JUP airdrop, we learned a ton about our infrastructure and ways to improve it. Here are some of our observations and key takeaways:

What went well

- Our extensive preparation through load testing really paid off, and we gracefully handled more traffic than we anticipated.

- Our war room coordination was flawless. Everyone was engaged, equipped with appropriate dashboards, and prepared to take action if necessary.

- We configured appropriate WAF rules in Cloudflare to ward off DDoS attacks.

- We had enough tools in our back pocket to mitigate issues on the fly.
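WAF and rate-limiting rules are configured in Cloudflare itself. The sketch below therefore only illustrates the underlying idea of per-client rate limiting with a token bucket. It is not how Cloudflare implements it, and the rate and burst values are hypothetical:

```python
import time
from typing import Callable


class TokenBucketLimiter:
    """Per-client token bucket: each client can burst up to `capacity` requests,
    and tokens refill continuously at `rate_per_s`."""

    def __init__(self, rate_per_s: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate_per_s
        self.capacity = capacity
        self.clock = clock
        self.buckets: dict[str, tuple[float, float]] = {}  # client -> (tokens, last seen)

    def allow(self, client_id: str) -> bool:
        now = self.clock()
        tokens, last = self.buckets.get(client_id, (self.capacity, now))
        tokens = min(self.capacity, tokens + (now - last) * self.rate)  # refill
        allowed = tokens >= 1
        if allowed:
            tokens -= 1
        self.buckets[client_id] = (tokens, now)
        return allowed


# Hypothetical policy: 5 requests/second per IP, bursts of up to 10.
limiter = TokenBucketLimiter(rate_per_s=5, capacity=10)
results = [limiter.allow("203.0.113.7") for _ in range(12)]
print(results.count(True), results.count(False))  # 10 2 (the burst is capped)
```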

What could have gone better

- Lockups during the load test could’ve been debugged better.

- Load testing for token prices could’ve been more extensive (less common tokens, more tokens at once).

- Better anticipation of Priority Fee increases.

- Our database queries could’ve been more optimized.

Learnings and future best practices

- Establish redundancies for the databases we share with Allium.

- Always have smart routing of RPC traffic in place in case one provider starts failing.

- Ensure our AWS Lambda concurrency limits are appropriately set according to the expected traffic. The same limit may not apply for every other airdrop.

- Load test all new services that we introduce in the future and make sure our staging environment always mirrors production as closely as possible.

- Make sure API calls related to other chains do not affect Solana features.

- Ensure the queries to Allium are as optimized as possible.
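One way to keep other chains from affecting Solana is the bulkhead pattern. Each chain gets its own concurrency budget, so a hung EVM or BTC backend can only exhaust its own slots. A minimal asyncio sketch follows; the chain names, limits, and simulated backends are hypothetical:

```python
import asyncio
from typing import Awaitable, Callable, TypeVar

T = TypeVar("T")


class ChainBulkheads:
    """Per-chain concurrency limits (bulkhead pattern): a stuck backend for one
    chain can only tie up that chain's slots."""

    def __init__(self, limits: dict[str, int]):
        # Create inside a running event loop (e.g. from a coroutine).
        self._slots = {chain: asyncio.Semaphore(n) for chain, n in limits.items()}

    async def run(self, chain: str, query: Callable[[], Awaitable[T]]) -> T:
        async with self._slots[chain]:
            return await query()


async def demo() -> list[str]:
    bulkheads = ChainBulkheads({"solana": 8, "evm": 2})
    evm_backend_recovered = asyncio.Event()

    async def evm_history_query() -> str:
        await evm_backend_recovered.wait()  # simulate a hung EVM backend
        return "evm"

    async def solana_query() -> str:
        await asyncio.sleep(0)  # stand-in for a fast Solana RPC call
        return "solana"

    # Five EVM requests: two hold both EVM slots, three queue behind them.
    evm_tasks = [asyncio.create_task(bulkheads.run("evm", evm_history_query)) for _ in range(5)]
    await asyncio.sleep(0)  # let the EVM tasks start

    # Solana requests still complete because they draw from their own slots.
    solana_results = await asyncio.wait_for(
        asyncio.gather(*(bulkheads.run("solana", solana_query) for _ in range(8))),
        timeout=1.0,
    )

    evm_backend_recovered.set()
    await asyncio.gather(*evm_tasks)
    return solana_results


print(asyncio.run(demo()))  # a list of eight "solana" results
```

With a single shared semaphore, the stuck EVM calls could starve Solana requests. Separate budgets are what make the isolation explicit.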

Now that we’re past it, we’d like to congratulate our partners, developers, users, and Jupiter Exchange for contributing to this monumental moment for Solana!

See you at the next drop 🪂
